Online Safety Act: Illegal Harms Code of Practice and Guidance

Publication, Takeaways and Next Steps

- Illegal Harms Codes of Practice and guidance published;

- In-scope services need to complete risk assessments by 16 March 2025;

- Take steps to mitigate against any harms present on the service in line with the safety duties under the OSA – Codes enforceable from 17 March 2025;

- Be ready for Child Access Assessments Codes and Guidance to be published in Spring 2025.

On 16 December 2024, Ofcom published its Statement on Illegal Harms (the "Illegal Harms Codes"). Providers of regulated services must now assess the risk of illegal harms on their services by 16 March 2025 and, subject to the Codes completing the Parliamentary process, have effective safety measures in place from 17 March 2025 at the latest. Ofcom has clearly signalled that services which deliberately or flagrantly fail to comply are likely to face swift regulatory action. Fortunately, there will be a digital tool to help services understand how to comply with their duties under the Codes. It will be divided into four steps that follow the risk assessment guidance.

The OSA lists over 130 priority offences, which Ofcom has grouped into 17 broad categories. The Illegal Harms Codes set out how services should comply with their duties under the OSA to assess the risk of harm arising from illegal content (for a user-to-user (U2U) service) or activity on their service, and take proportionate steps to manage and mitigate those risks.

Chatbots and GenAI sites or apps are likely to be caught within the definitions of user-to-user services or search services (Open letter to UK online service providers regarding Generative AI and chatbots - Ofcom).

The Video Sharing Platform regime is currently out of scope of the Act but will eventually be brought into the regime and platforms would be well advised to take steps to prepare for regulation and compliance now (Repeal of the VSP regime: what you need to know - Ofcom).

The requirement to carry out a risk assessment stems from section 9 of the OSA. Services will then need to:

- Decide on the appropriate online safety measures for their service to reduce risk of harm to individuals;

- Consider any additional measures that may be appropriate for them to impose in order to protect people using their service;

- Implement all associated safety measures;

- Record the outcomes of the risk assessment;

- Evaluate the effectiveness of the measures and keep records.

All services within scope will need to take a number of actions where the risk assessment process identifies any low, single or multi-risk of illegal harm on the platform. These actions are helpfully set out in Ofcom's summary guidance.

- Governance and reporting: For all U2U and search services, risk assessment outcomes and online safety measures have to be reported to the service's most senior governance body (ICS and ICU A1). This means that there needs to be a senior accountable individual within the organisation responsible for illegal harms (ICS and ICU A2). However, for small services without formal boards or oversight teams, it will be sufficient to report to a senior manager with responsibility for online safety. Risk assessments should be kept in line with a service's document retention policy or for three years, whichever is the longer.

- Training: staff should be provided with training about the requirements of the Act, reporting mechanisms and internal processes and governance structures. Although the requirement to provide compliance training doesn't apply to small low or single risk U2U services, such services should still consider whether to implement a programme to ensure staff understand the requirements of the Act.

- Content Moderation (U2U Services): The requirement to have a content moderation function that reviews and assesses content and is able to take down illegal content swiftly applies to all types of services irrespective of risk.

- Search Moderation (Search Services): All services must have a search moderation function designed to action illegal content (ICS C1).

- Complaints: All services are expected to enable complaints and to have a complaints system and process that is easy to find, access and use. All services will also need to evidence that they take appropriate action for relevant complaints (as broken down at ICU D7, D10 – D13 (U2U Services); ICS D1, D2, D6, D9 – D12 (Search Services)).

- Terms of Service & Publicly Available Statements: All U2U services are expected to have terms of service which are accessible and clear (ICU G1 & G3), as are search services in relation to publicly available statements (ICS G1 and G3).

- User Access: All U2U services are expected to remove accounts of proscribed organisations (ICU H1). These are terrorist organisations proscribed by the UK government. Paras 2.108 and 2.109 set out the approach to identifying such accounts in practice including the name, profile images, bio text and potentially the type of content posted.

Notable changes between consultation and publication include:

- Keyword Detection: Removal of the requirement that services use keyword detection to identify content that is likely to amount to a priority offence concerning articles for use in frauds. Ofcom acknowledges that there are likely to be more sophisticated measures and anticipates a further consultation round on automated content moderation in Spring 2025.

- CSAM: Automated Hash Moderation: Applies to U2U services which are at high risk of image-based CSAM and have more than 700k UK monthly active users and all U2U services which are at high risk of image-based CSAM and are file storage and file sharing services.

- "Manifestly Unfounded" (Spam) Complaints: Provision for services to disregard "manifestly unfounded" complaints. This section contains a lot of detail that merits review by services considering how to incorporate the provision into their policies and practice.

- Recommender Systems: Changes to the requirements to review and record the impact of changes where making "design adjustments" to recommender systems. This is distinct from "substantial changes" which would be caught by the requirement to update a risk assessment.

- Smaller, lower risk services: some changes to what these types of services are required to comply with due to concerns that the measures would have imposed a disproportionate regulatory burden.

- Offence Specific Guidance: A number of changes have been made to the offence specific guidance, including the guidance on fraud by false representation, intimate image abuse, sexual exploitation of adults, encouraging/assisting suicide and self-harm, and cyberflashing.

All of the changes made between consultation and publication should be carefully reviewed to ensure services have understood the requirements of the Act and how they may impact on any preparations already carried out to assess the presence of risks on the platform and measures taken in response.

Ofcom sees effective governance as a key mechanism for monitoring compliance with the duties under the OSA. Unlike in other areas of the Codes, where a nuanced approach is taken depending on the size of the service and the level of risk identified as likely to be present, all services are required to have a senior accountable individual within the organisation responsible for illegal harms and to report to the service's most senior governance body. This approach chimes with the trend for online safety to move into a compliance function, with clear tracking and monitoring of risks and a continual feedback loop on how effective changes are.

Given the size of the penalties for failures to comply with the OSA, even if this approach wasn't required across the board, services would be well advised to pay close regard to the presence of illegal content on their platforms and ensure C-suite are fully briefed on issues and paying close attention to compliance and evaluating the effectiveness of measures taken in response. The challenge will be converting evaluations into easily translatable metrics so that services understand the risks, take informed decisions on actions and measures and discuss issues at an early stage before they materialise as a crisis.

Risk assessments are the beginning of the regulatory regime; implementation of measures and evaluation of their effectiveness is a crucial next step. Ofcom has published a number of papers on evaluating the effectiveness of online safety measures and, similar to the approach taken to VSP regulation, services are likely to find that it seeks engagement on a voluntary basis while it works out the optimum way to approach evaluation.

Looking Ahead

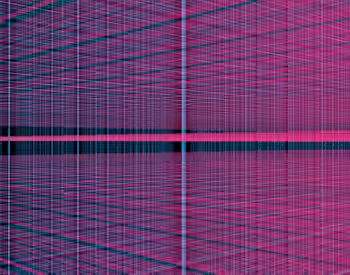

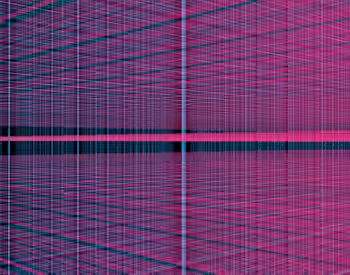

A timeline of the next steps that in-scope companies must take is set out below (actions that Ofcom will take are highlighted pink; actions for companies are in green).

For advice on how the Act may impact your business, or for guidance on compliance with the OSA, please contact John Brunning and Nicola Margiotta.

With thanks to Katrina Angliss, Lucy McKenzie, Jonathan Peters and Harrison Cunningham for their assistance with this article.